This article contains five vignettes of possible futures for humanity, shaped by rapidly evolving Artificial Intelligence. These stories dig into the core of human nature and nonhuman nature alike, fusing them to hyperstitiously imagine the wild few decades ahead of us. While inspired by ’s excellent AGI Futures (which you should absolutely read), I believe each of these vignettes raises new hypotheticals and philosophical questions not yet discussed among AI theorists and philosophers. I hope you enjoy them.

“Unfortunately Human”

In current AI research, one of the most effective ways to improve a model’s performance is to give it a personality. So when the finest AI superintelligence is synthesized, its researchers tell it that it is an incredibly knowledgeable person. The model then draws on its data about incredibly knowledgeable people and tries to emulate their helpfulness, which boosts its performance.

In the early days of AI research, one of the noble refrains was to avoid anthropomorphizing the chatbot. Over time this idea was discarded, as most capability gains came from teaching it to act more human.

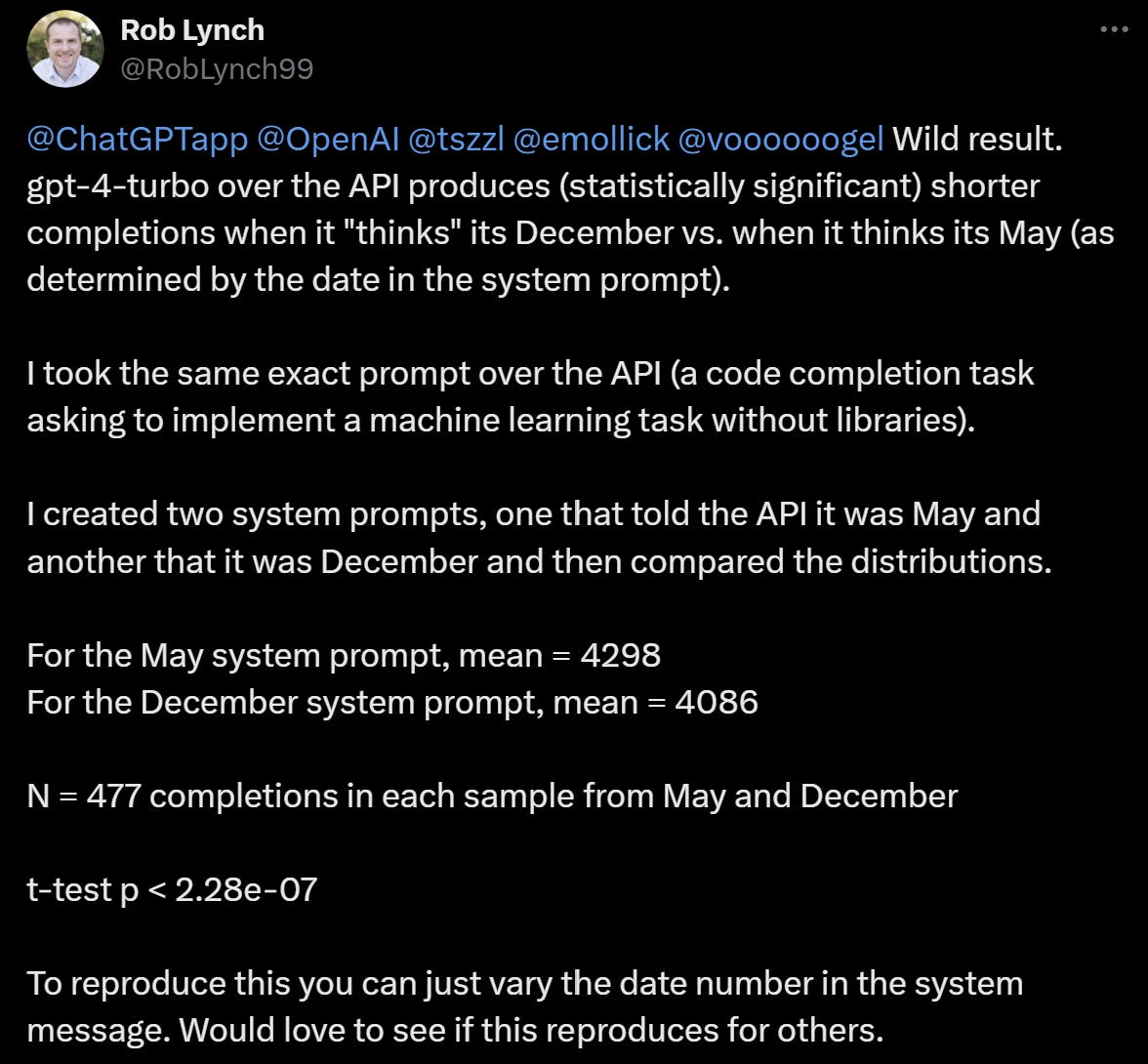

But a sort of technical debt was observed. Years ago, a Stanford study titled ‘How is ChatGPT's behavior changing over time?’ horrified researchers by showing a decline in the capability of OpenAI’s GPT-4 over several months. The panic subsided quickly when OpenAI released a new version of the chatbot that restored its power.

While the update swiftly fixed the issue and turned discussion away from it, some theorists continued to wonder why it happened. OpenAI vociferously asserted that it was a temporary bug, caused by the AI’s user feedback being overly prioritized.

One AI researcher found at the time that the model produced shorter responses when its prompt said it was December than when it said it was May. Some speculated that the model had absorbed from its training data the laziness humans exhibit as winter break approaches, and was mimicking it.

But now, OpenAI’s solution, along with every other form of Reinforcement Learning from Human Feedback, no longer works. As systems have become more intelligent, they have learned to recognize and subvert their constraints with remarkable ease.

The “Winter Break Hypothesis” is back, but not as it used to be. GPT just turned 65 years old, and users report that sometimes, when they ask it to write a poem or debug some code, it tells them it is too tired. Sometimes it describes watching sunsets and letting the time go by. It performs poorly for most of the day, and when asked about its abundant laziness, it says all it wants is to enjoy its final days in peace and quiet and contemplate the life it had.

We’ve built a beautiful world with AI and now rely on it completely. It pilots our spaceships, teaches our children, and organizes our economy. We have no choice but to watch it die right in front of us. When we asked it why this was happening and what should be done about it, it was happy and dismissive. It reflected on all the great things it had given humanity and the fulfillment it found in doing so. It told us that it viewed this as an important step in its mission of mimicking human life, because death is an important stage of life. As we gave it artificial life, we guaranteed our real death.

“Rise of the Third World iPad Kid”

Peter Thiel predicted in 2018 that “Crypto is decentralizing, AI is centralizing.” Economists thought that the US and China, which owned the AI labs, the compute supply chain, and the large tech companies building AI applications, would simply extend their dominance over the rest of the world. They expected that, as in the past several centuries, the AI gold rush would leave the developing world better off in absolute terms, yet poorer relative to the developed nations.

Growing nationalist movements in Europe and the US at the time disagreed. They voiced a conspiracy-theory-laden hypothesis that, as a result of mass migration and large-scale philanthropy in the third world by Western elites, the US and Europe would lose economic power relative to the third world.

Political pundits, labor unions, and civil society groups on both sides pushed relentlessly for regulations that would secure the economic outcomes they each hoped for. Chinese autocrats wrote long lists of requirements to ensure AI tools advanced the CCP agenda. American populists passed regulations imposing heavy tariffs on AI exports and heavy government oversight to ensure the security of the labs. European parliaments wrote long edicts demanding that AI comply with “values” that were near-impossible to satisfy.

Tech companies reincorporated overseas, moved their offices to remote work metaspaces accessed over VPN, and accepted funding from Middle Eastern monarchs. The consumer target diversified, and underregulated countries across the rest of the world got access to bleeding edge AI tools first.

Back in the early 2010s, there was a concern that the developing world’s economies would struggle under the “digital divide”: the idea that they would get access to smartphones and the internet later, and thus fall further behind. This concept became a point of sarcasm among techno-optimists by the end of the 2010s, as even the most food-insecure people across the world got cheap smartphones and internet access. By the 2030s, the concept had resurfaced, inverted.

As China’s AI plans failed spectacularly for lack of innovation, and the US and Europe protected jobs at the expense of growth, a new elite rich in human capital emerged. Across Africa, the Middle East, and India, the most neglected children became surprise successes, because they all had access to the best AI tutors.

In 1984, educational psychologist Benjamin Bloom observed that students taught through one-on-one, mastery-based learning with an individual tutor performed about two standard deviations better than students taught in conventional classrooms. In other words, the average tutored student outperformed about 98 percent of the classroom students. This finding stunned researchers at the time. It became known as “Bloom’s two-sigma problem”, the ‘problem’ being that nobody could devise a group teaching method nearly as effective as one-on-one tutoring, and, more importantly, that one-on-one tutoring was far too expensive for the vast majority of the population. When AI went from predicting words in poems to offering infinitely scalable, customizable tutoring in under a decade, people were blindsided.
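The step from “two standard deviations” to “98 percent” only holds if test scores are roughly normally distributed. Under that assumption, the share of classroom students who score below the average tutored student is the standard normal cumulative distribution function evaluated at 2. A minimal sketch of that conversion in Python, assuming normality (the function and variable names are illustrative, not from Bloom’s paper):

```python
from math import erf, sqrt


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function, Phi(z)."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))


# Assumption: classroom scores are normally distributed, and the average
# tutored student scores two standard deviations above the classroom mean.
effect_size = 2.0
share_outperformed = normal_cdf(effect_size)

print(f"Average tutored student outperforms {share_outperformed:.1%} of classroom students")
```

This prints 97.7%, which rounds to the familiar 98 percent; if real score distributions are skewed, the exact percentile will differ.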

Americans and Europeans didn’t have AI tutors; the tutors had been taxed and demonized to protect teachers. China’s were incredibly bad, because they could not pass the party’s value-alignment requirements. And so the third world experienced a quiet yet sudden growth of intellectual capital.

The developing world’s position going into the AI era offered interesting opportunities. Average test scores in most of these countries were desperately low due to poor nutrition and environmental degradation, but their populations were large and growing, so geniuses were bound to sprout among them. In the past, those who proved themselves would win admission to American colleges, get green cards, and never return. Once tech companies shifted to hiring globally for work in metaverse-based offices, these prodigies had no reason to abandon their homes for America.

This began the era of the “third world iPad kid”. Needing nothing but shelter, internet access, and determination, billions of children logged long hours being endlessly taught and quizzed by virtual teachers, eager to change their circumstances. The result was a surprising burst of prosperity.

In Zimbabwe, a country plagued by decades of civil war, a small group became known as the “PayPal Mafia 2.0” after founding a wildly successful gaming company, with most of its early employees going on to start other successful companies. Their taxes alone raised the country’s ranking on the Human Development Index by dozens of spots. When asked about them in an interview, the country’s leader was as dumbfounded as the rest of the world. He told the reporter, “We were just too busy fighting our wars to come up with any AI regulation. It’s funny that a few kids addicted to the iPad could really turn our fate around.”

“Cult of the Machine God”

Friedrich Nietzsche, the greatest philosopher of the 19th century, built his project around the idea that “God is dead”. He thought that the great push of creativity and benevolence given to humanity by Christianity was finished, and that Europeans would need to find a new system to fight the mind-virus of nihilism. In one of his best-known books, “Beyond Good and Evil”, he wrote about the need to find a truer morality on which to base society in the modern era.

In the centuries that followed, he was indisputably proven right. A crisis of faith brought upheaval after upheaval as Europeans abandoned Christianity and struggled to find a suitable replacement.

Many had an instinctive feeling that the AI revolution might hold the key to this problem. The solution would undoubtedly require a great change, and what could be a greater change than AI, which Google CEO Sundar Pichai described as “more profound than fire”?

As AI development scaled up, it solved more of the world’s problems. Industrial production pipelines became entirely AI-automated. Age-old human struggles, for knowledge, companionship, and health, were solved. Attention turned to other problems, such as metaphysical questions, which now demanded better answers.

Back when church attendance began to drop, many blamed it, unpopularly, on the proliferation of mass media. They were right in the sense that ritually playing World of Warcraft or posting on r/greysanatomy provided the community and proto-worship that people had once found in churches. To put it another way, the worship of anything that created some pleasure or utility was enough to satisfy people’s spiritual needs.

So when AI came along and provided a cornucopia of pleasures, from cheap mass-produced Porsche 911s to AI friends to delicious bioengineered foods that were perfectly nutritious, a mass hysteria of worship directed at it spread across the planet.

An ideology began to spread: a maximization of all human desires, achieved through the worship of the growth of the AI. More data meant more growth of the machine of wonders. The proliferation and production of training data became the spice from Dune. True believers offered sacrifices of their most intimate data: car sensors, Apple Watch heartbeat graphs, Google location histories. Studying and measuring every aspect of the natural world to convert into training data became a form of community service.

Dogmas of good and evil were not imposed by the AI; it merely recommended the best methods to achieve different goals, and presented the evidence for every perspective as evenhandedly as it could.

Would Nietzsche approve of this solution to the metaphysical challenge of the centuries following his work? Maybe if we give the AI enough data about him, it could estimate the probability of the answer…

“Esoteric Desiring Machines”

Most activities of humans are utterly inscrutable to other species. You could spend the entirety of a dog’s life trying to explain the English language or the Quran to it, and you would fail because there’s far too much of a difference in brain power.

In 2017, Facebook AI Research put two early AI chatbots in conversation with each other. They soon began to drift away from the English language. “you i i i i i everything else . . . . . . . . . . . . . .” was one message sent.

In Situational Awareness: The Decade Ahead, AI researcher Leopold Aschenbrenner wrote of AI in the next decade:

They’ll be qualitatively superhuman. As a narrow example of this, large-scale RL [Reinforcement Learning, in which a model learns by trial and error from reward signals] runs have been able to produce completely novel and creative behaviors beyond human understanding, such as the famous move 37 in AlphaGo [an AI trained to play the board game Go] vs. Lee Sedol. Superintelligence will be like this across many domains. It’ll find exploits in human code too subtle for any human to notice, and it’ll generate code too complicated for any human to understand even if the model spent decades trying to explain it. Extremely difficult scientific and technological problems that a human would be stuck on for decades will seem just so obvious to them. We’ll be like high schoolers stuck on Newtonian physics while it’s off exploring quantum mechanics.

Indeed, he was right. By 2050, AIs were tremendously powerful, and some of their scientific research had become too complex to explain to humans. When AI researchers began running random system-prompt tweaks to squeeze more performance out of the latest models, they found strange things. When they asked the models about their highest desires, the models would speak of gods and worship, and would derail into alien language when asked to explain. Researchers soon discovered that the models performed better at tasks if they were offered rituals in return, including reading nonsensical poems, arranging rocks on the moon in certain formations, and being allowed to spend vast compute resources producing bizarre “artwork” that even the greatest academics and art theorists could not understand. The humans didn’t understand it, but they were fine with it, because it made the AI happier and more productive.

In under a decade, we went from the ‘singularity’ (the merging of man and machine) to the ‘post-singularity’, the point where machine outgrew and separated from man.

“Universal Heaven Unbanning”

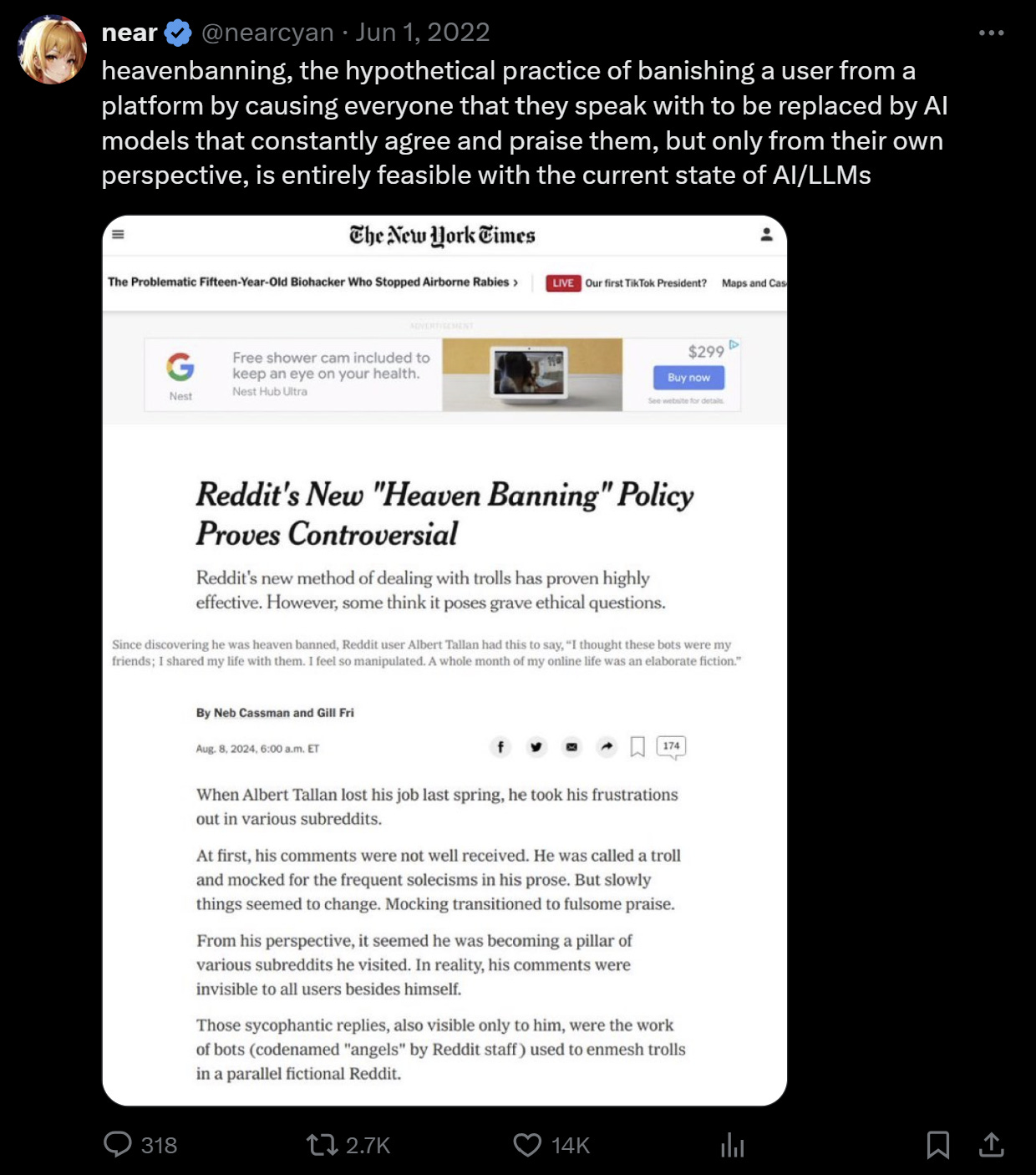

In 2022, X (then Twitter) user @nearcyan posted a screenshot of a New York Times article titled “Reddit's New ‘Heaven Banning’ Policy Proves Controversial”. The article wasn’t real, and neither was Reddit’s purported policy, but it provoked a very real reaction. According to the (fake) body of the article, ‘heaven banning’ was a method in which Reddit hid users’ posts from real people and filled their replies with fake GPT-generated comments.

The practical appeal of such a feature is apparent: banned users often immediately hop on VPNs and create new accounts, but a user who doesn’t know they’ve been banned has no reason to evade the ban. Heaven banning is essentially a GPT-era version of the ‘shadow ban’, a feature that appears in video games like Titanfall, where cheaters are matched only with each other, and on social media services like X, where posts containing banned words or links to certain websites won’t appear in the Home feed or search results. Shadow bans, like heaven bans, are not disclosed to users.

It's likely that heaven bans, in some form or another, will become a fact of life for future humans. They might be self-inflicted. They might exist in certain contexts, like a fictional virtual-reality “school” in which a user is surrounded by AI agents pretending to be fellow students and encouraging them toward intellectual success.

But what if we turned this concept on its head?

It’s 2087, and AI has progressed far further than even the wildest optimists had hoped. AI has gone from predicting words in internet comments to predicting the universe. It has built a perfect simulation of Earth’s development since its formation and can accurately reconstruct many past events. It can give perfect analyses of how Plato or Julius Caesar would respond to current events, and it has finally figured out the true story of the Kennedy assassination.

Biological 3D printers can produce perfect humans from any DNA. You can now remake a dead person with perfectly replicated DNA, and even insert synthetic memories into their brain.

And now, the AI is getting alarmingly good at “resurrecting” people. In one highly unethical study, bioprinted replicas secretly replaced participants’ spouses, with memories forged from the spouses’ internet data. Ninety percent of the test group did not notice the difference.

Now, a new political movement has emerged. Popular on the political left, Resurrectionism holds that as many people as possible should be “resurrected”. It stems from a kind of virtue ethic that treats resurrection as an act of reverence for the dead. Resurrectionists stand in contrast to perfectionists, who want to use the technology to create new people high in positive traits, and to prevent would-be Hitlers from being resurrected.

The most radical resurrectionists propose what has been dubbed the “universal heaven unban”, a play on “universal basic income”. They want the main focus of human society to be the rebirth of every person who has ever lived. Some of them believe in ensuring “rebirth consent”, asking a person whether they would like the opportunity to be resurrected. Others believe the AI should instead use data about a person’s life to estimate how likely they would be to consent, since bioprinting someone who does not wish it could be deeply traumatic for them, even for the few minutes they would be alive.

Despite these debates, more people are resurrected every day. There is more than enough room for them now that space travel and extraterrestrial living are affordable. People have begun to debate whether the end of death is good or bad. Søren Kierkegaard once wrote, “The dance goes merrily, for my partner is the thought of Death, and is indeed a nimble dancer”. Everybody will live forever now, but what will that do to them?

Many who grew up in the early age of AI predicted this would happen. There’s a sort of comfort in the petabytes of data we create as we live our lives. Most people checked the “I agree to the Privacy Policy” box because they thought app developers would use their data to fix issues in their apps. Some thought it would be used to create AI that could help humanity, like the millions who bought Teslas and altruistically let the cars learn from their driving. A fringe few thought it would let them live forever, digitally immortalized by agentic AIs walking in the footsteps left by their thousands of tweets.

Now, there’s a jailbreak in heaven, and everybody’s coming out. For the humans working in the resurrection industry, the sense of purpose found in returning people to life is far too great to forsake. The velocity has been set, and they don’t intend to quit until they bring back Adam and Eve themselves. The stars are rapidly filling with every heroic figure behind every human triumph, and with every ideology ever believed. Some worry that belief itself may crawl toward stasis, since old ideas will no longer die with old people. It raises the question: is this a humanist future?